[Model Review] Markov Decision Process & Q-Learning

1. 마르코프 결정 프로세스(MDP) 바닥부터 배우는 강화학습 - 마르코프 결정 프로세스(Markov Decision Process) 마르코프 프로세스(Markov Process) 상태 S와 전이확률행렬 P로 정의됨 하나의 상태에서 다른

dnai-deny.tistory.com

Deep Reinforcement Learning

- 기존 Q Learning에서는 State와 Action에 해당하는 Q-Value를 테이블 형식으로 저장

- state space와 action space가 커지면 Q-Value를 저장하기 위해 memory와 exploration time이 증가하는 문제

Deep Q-Network(DQN)

CNN + Experience replay + Target network

- 기존 Deep Q-Learning

- 파라미터를 초기화하고 매 스텝마다 2-5를 반복

- Action \( a_t )\를 E-Greedy 방식으로 선택

- E-Greedy = policy가 정한 action을 1-E의 확률로 실행, E의 확률으로는 랜덤 action 실행

- Action \( a_t \)를 수행해서 transition \( e_t = (s_t, a_t, r_t, s_{t+1}) \)을 얻음

- Target value \( y_t = r_t + \gamma max_{a'}Q(s_{t+1}, a; \theta) \)

- Loss function \( (y_t - Q(s_t, a_t;\theta))^2 \) 를 최소화하는 방향으로 \( \theta \)를 업데이트

→ DQN에서는 transition sample을 얻는 3번에서 experience replay, 가중치 업데이트 4-5번에서 target network 적용

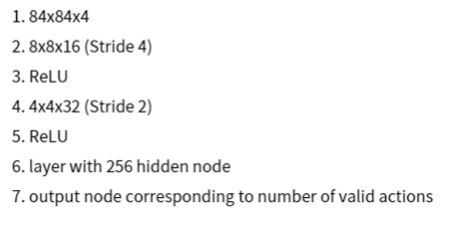

CNN Architecture

- CNN을 도입해서 인간과 비슷한 형태로 Atari 게임(벽돌깨기)의 input을 처리함

- 높은 차원의 이미지 인풋 처리에 효과적 → linear function approximator보다 성능이 좋다

- CNN 입력으로 action 제외, state만 받고 출력으로 action에 해당하는 복수 개의 Q-Value를 얻는 구조

- Q-Value 업데이트를 위해 4번 과정 계산을 할 때, max값을 찾기 위해서 여러 번 action마다 state를 통과하지 않고 한 번에 계산 가능

Experience Replay

- 매 스텝마다 추출된 샘플(액션을 수행한 결과) 를 replay memory D에 저장

- D에 저장된 샘플을 uniform 하게 랜덤 추출해서 Q-update 학습에 사용

- 현재 선택한 action을 수행해서 결과 값 + 샘플을 얻음(동일)

- 바로 평가에 사용하지 않고 지연!

1) Sample correlation

- 딥러닝에서 학습 샘플이 독립적으로 추출되었다고 가정하고 모델을 학습함.

- 그러나 강화학습에서는 연속된 샘플 사이에 의존성이 존재함 → 이전 샘플이 다음 샘플 생성에 영향을 줌.

- ⇒ replay memory D에 저장했다가 랜덤하게 추출해서 사용하면 이전의 샘플 결과가 반드시 다음 상태에 영향을 주지는 않게 됨 == 독립추출과 유사한 결과

2) Data Distribution 변화

- 모델을 정책 함수에 따라서 학습할 경우 Q-update로 정책이 바뀌게 되면 이후에 생성되는 data의 분포도 바뀔 수 있다.

- 현재 파라미터가 샘플에 영향을 주므로 편향된 샘플만 학습될 수 있음

- 좌측으로 가는 Q 값이 크면 다음에도 왼쪽으로만 가는 feed back loop 생성할 수 있음

- 이렇게 되면 학습 파라미터의 불안정성을 유발하고 이로 인해 local minimum 수렴 / 알고리즘 발산 등의 문제 발생 가능

- ⇒ replay memory에서 추출된 데이터는 시간 상의 연관성이 적다. + Q-function이 여러 action을 동시에 고려해서 업데이트(CNN)되므로 정책 함수와 training data의 분포가 급격히 편향되지 않고 smoothing됨

- → 기존 deep Q-learning의 학습 불안정성 문제 해결

Experience Replay의 장점

- Data Efficiency 증가 : 하나의 샘플을 여러번 모델 업데이트에 사용 가능

- Sample correlation 감소 : 랜덤 추출로 update variance 낮춤

- 학습 안정성 증가 : Behavior policy가 평균화 되어 파라미터 불안정성과 발산 억제

Target Network

- Target network \( \theta^- \)를 이용해서 target value \( y_t = r_t + \gamma max_{a'}Q(s_{t+1}, a; \theta) \) 계산

- Main Q-network \( \theta \) 를 이용해서 action value \( Q(s_t, a_t;\theta) \) 를 계산

- Loss function \( (y_t - Q(s_t, a_t;\theta))^2 \) 이 최소화되도록 Main Q network \( \theta \) 업데이트

- 매 C 스텝마다 target network \( \theta^-$를 main Q-network \( \theta \) 로 업데이트

- 기존 Q network를 복제해서 main Q-network와 Target network 이중 구조로 만듬

- Main Q-network : state / action을 이용해 결과인 action value Q를 얻는데 사용, 매 스텝마다 파라미터 업데이트

- Target network : 파라미터 업데이트의 기준값이 되는 target value를 얻는데 사용, 매 스텝마다 업데이트 되지 않고 C 스텝마다 파라미터가 Main Q-Network와 동기화

⇒ 움직이는 target value 문제로 인한 불안정성 개선

3) 움직이는 Target Value

- 기존 Q-Learning에서는 모델 파라미터 업데이트 방식으로 gradient descent 기반 방식 채택

- 업데이트 기준점인 target value \( y_t \) 도 \( \theta \)로 파라미터화 → action value와 target value가 동시에 움직임

- 원하는 방향으로 \( \theta \)가 업데이트 되지 않고 학습 불안정성 유발

⇒ C step 동안 target network를 이용해 target value 고정해두면 C step 동안은 원하는 방향으로 모델 업데이트 가능, C step 이후 파라미터를 동기화해서 bias 줄여줌

Preprocessing

- 2D Convolutional layer에 맞춰서 정사각형 형태의 gray scale 이미지 사용

- down sampling

- 4개 프레임을 하나의 Q-function 입력으로

Model Architecture

Model eveluation

- 최대 reward

- 최대 평균 Q value(smooth)

References

Playing Atari with Deep Reinforcement Learning

We present the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning. The model is a convolutional neural network, trained with a variant of Q-learning, whose input is raw

arxiv.org

[RL] 강화학습 알고리즘: (1) DQN (Deep Q-Network)

Google DeepMind는 2013년 NIPS, 2015년 Nature 두 번의 논문을 통해 DQN (Deep Q-Network) 알고리즘을 발표했습니다. DQN은 딥러닝과 강화학습을 결합하여 인간 수준의 높은 성능을 달성한 첫번째 알고리즘입니

ai-com.tistory.com

Playing Atari with Deep Reinforcement Learning · Pull Requests to Tomorrow

Playing Atari with Deep Reinforcement Learning 07 May 2017 | PR12, Paper, Machine Learning, Reinforcement Learning 이번 논문은 DeepMind Technologies에서 2013년 12월에 공개한 “Playing Atari with Deep Reinforcement Learning”입니다. 이

jamiekang.github.io

[논문 리뷰] Human-level Control through Deep Reinforcement Learning (DQN)

Human-level control through deep reinforcement learning | Nature 이번에는 Nature지에 발표된 DQN관련된 논문을 리뷰해보고자 한다. Playing Atari with Deep Reinforcement Learning과 거의 같은 저자들이 작성을 했는데 이는

limepencil.tistory.com

'🐬 ML & Data > 📮 Reinforcement Learning' 카테고리의 다른 글

| [강화학습] Dealing with Sparse Reward Environments - 희박한 보상 환경에서 학습하기 (2) | 2023.10.23 |

|---|---|

| [강화학습] DDPG(Deep Deterministic Policy Gradient) (0) | 2023.10.16 |

| [강화학습] Dueling Double Deep Q Learning(DDDQN / Dueling DQN / D3QN) (0) | 2023.10.06 |

| [강화학습] gym으로 강화학습 custom 환경 생성부터 Dueling DDQN 학습까지 (0) | 2023.08.16 |

| [강화학습] Markov Decision Process & Q-Learning (0) | 2023.08.01 |