In this post, I rebuild with Docker the PostgreSQL DB monitoring setup that I previously ran by starting Elasticsearch, Kibana, and Logstash separately.

The post where I set up ELK in a local environment is at the link below.

'🐥 Web/❔ Back-end | etc.' category post list (dnai-deny.tistory.com)

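Deploying with Docker lets you reproduce someone else's development environment, versions, and configuration with a single command. The ELK stack takes a fair amount of preparation, so I decided to study Docker as a way to make it simpler to use. The groundwork has to be done carefully, but if I learn it properly I'll be able to do it again next time...👀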

The full code is available here:

GitHub - melli0505/Docker-ELK-PostgreSQL: PostgreSQL Database monitoring & indexing system with Docker ELK (github.com)

Building Docker ELK

I start from the open-source implementation below.

GitHub - deviantony/docker-elk: The Elastic stack (ELK) powered by Docker and Compose. (github.com)

- Services, in the order covered below: setup, Elasticsearch, Kibana, Logstash
- All of them are attached to the same network (`elk`, `driver: bridge`)

1. Setup Container

- Builds and runs the setup Dockerfile, which covers:
  - the entrypoint
  - user passwords
  - roles
  - the roles files

```yaml
setup:
  profiles:
    - setup
  build:
    context: setup/
    args:
      ELASTIC_VERSION: 8.8.1
  init: true
  volumes:
    - ./setup/entrypoint.sh:/entrypoint.sh:ro,Z
    - ./setup/lib.sh:/lib.sh:ro,Z
    - ./setup/roles:/roles:ro,Z
  environment:
    ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
    LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
    KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
    MONITORING_INTERNAL_PASSWORD: ${MONITORING_INTERNAL_PASSWORD:-}
  networks:
    - elk
  depends_on:
    - elasticsearch
```

Roles & user setup

Looking at the `entrypoint.sh` file:

```bash
declare -A users_roles
users_roles=(
    [logstash_internal]='logstash_writer'
    [monitoring_internal]='remote_monitoring_collector'
)

# --------------------------------------------------------
# Roles declarations

declare -A roles_files
roles_files=(
    [logstash_writer]='logstash_writer.json'
)

for role in "${!roles_files[@]}"; do
    log "Role '$role'"

    declare body_file
    body_file="${BASH_SOURCE[0]%/*}/roles/${roles_files[$role]:-}"
    if [[ ! -f "${body_file:-}" ]]; then
        sublog "No role body found at '${body_file}', skipping"
        continue
    fi

    sublog 'Creating/updating'
    ensure_role "$role" "$(<"${body_file}")"
done
```

It creates each role from the role files under `./roles/` (the `ensure_role` command). The role we need here is `logstash_writer`.

`roles/logstash_writer.json`:

```json
{
  "cluster": ["manage_index_templates", "monitor", "manage_ilm"],
  "indices": [
    {
      "names": ["logs-generic-default", "logstash-*", "ecs-logstash-*"],
      "privileges": ["write", "create", "create_index", "manage", "manage_ilm"]
    },
    {
      "names": ["logstash", "ecs-logstash"],
      "privileges": ["write", "manage"]
    }
  ]
}
```

- Next, it creates the users.

```bash
declare -A users_passwords
users_passwords=(
    [logstash_internal]="${LOGSTASH_INTERNAL_PASSWORD:-}"
    [kibana_system]="${KIBANA_SYSTEM_PASSWORD:-}"
    [monitoring_internal]="${MONITORING_INTERNAL_PASSWORD:-}"
)

for user in "${!users_passwords[@]}"; do
    log "User '$user'"
    if [[ -z "${users_passwords[$user]:-}" ]]; then
        sublog 'No password defined, skipping'
        continue
    fi

    declare -i user_exists=0
    user_exists="$(check_user_exists "$user")"

    if ((user_exists)); then
        sublog 'User exists, setting password'
        set_user_password "$user" "${users_passwords[$user]}"
    else
        if [[ -z "${users_roles[$user]:-}" ]]; then
            suberr ' No role defined, skipping creation'
            continue
        fi

        sublog 'User does not exist, creating'
        create_user "$user" "${users_passwords[$user]}" "${users_roles[$user]}"
    fi
done
```

The predefined users are created with the `create_user` command; a user that already exists only gets its password set. The passwords and other values are defined in the `.env` file.
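The helpers `ensure_role`, `create_user` and `set_user_password` live in `lib.sh`, which this post does not show. Assuming they wrap the documented Elasticsearch security REST endpoints (`/_security/role/<name>`, `/_security/user/<name>` and `/_security/user/<name>/_password`), the two loops above boil down to a handful of requests. The Python sketch below only plans those requests and sends nothing; `plan_setup_requests` is a hypothetical name, and the exact calls `lib.sh` makes may differ.

```python
import json

# Role mapping copied from users_roles in entrypoint.sh.
USERS_ROLES = {
    "logstash_internal": "logstash_writer",
    "monitoring_internal": "remote_monitoring_collector",
}


def plan_setup_requests(role_bodies, passwords, existing_users):
    """Return (method, path, body) tuples mirroring the two setup loops.

    role_bodies:    role name -> role definition (e.g. logstash_writer.json)
    passwords:      user name -> password from .env ("" means not set)
    existing_users: users Elasticsearch already knows (e.g. built-in kibana_system)
    """
    requests = []
    for role, body in role_bodies.items():
        # Create or update the role from its JSON definition.
        requests.append(("POST", f"/_security/role/{role}", json.dumps(body)))
    for user, password in passwords.items():
        if not password:
            continue  # "No password defined, skipping"
        if user in existing_users:
            # Existing user: only reset the password.
            requests.append(("POST", f"/_security/user/{user}/_password",
                             json.dumps({"password": password})))
        elif user in USERS_ROLES:
            # New user: create it with its single predefined role.
            requests.append(("POST", f"/_security/user/{user}",
                             json.dumps({"password": password,
                                         "roles": [USERS_ROLES[user]]})))
        # Otherwise: "No role defined, skipping creation"
    return requests
```

Calling it with `kibana_system` treated as an existing built-in user and `monitoring_internal` left without a password yields one role request for `logstash_writer`, one user creation for `logstash_internal`, and one password reset for `kibana_system`, while `monitoring_internal` is skipped just as the shell loop does.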

2. Elasticsearch Container

- After the first run, certificates are generated under the `config/certs` folder. Copy them as-is into `logstash/config/certs`; otherwise Logstash does not get permission to connect.
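The copy step can be scripted so it is easy to repeat whenever the certificates are regenerated. This is a minimal Python sketch assuming the project layout used in this post (`elasticsearch/config/certs` and `logstash/config/certs` under the same project root) and that the certificates are visible on the host at that path; `copy_certs` is a hypothetical helper, not part of docker-elk.

```python
import shutil
from pathlib import Path
from typing import List


def copy_certs(project_root: Path) -> List[str]:
    """Copy elasticsearch/config/certs into logstash/config/certs.

    Returns the copied file names (relative to the destination) for a quick check.
    """
    src = project_root / "elasticsearch" / "config" / "certs"
    dst = project_root / "logstash" / "config" / "certs"
    if not src.is_dir():
        raise FileNotFoundError(f"{src} does not exist yet; start Elasticsearch once first")
    # dirs_exist_ok (Python 3.8+) allows re-running after certificates are regenerated.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    return sorted(p.relative_to(dst).as_posix() for p in dst.rglob("*") if p.is_file())
```

Run it from the project root as `copy_certs(Path("."))` after Elasticsearch has started once and before starting Logstash.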

```yaml
elasticsearch:
  build:
    context: elasticsearch/
    args:
      ELASTIC_VERSION: ${ELASTIC_VERSION}
  volumes:
    - ./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml:ro,Z
    - elasticsearch:/usr/share/elasticsearch/data:Z
  ports:
    - 9200:9200
    - 9300:9300
  environment:
    node.name: elasticsearch
    ES_JAVA_OPTS: -Xms512m -Xmx512m
    # Bootstrap password.
    # Used to initialize the keystore during the initial startup of
    # Elasticsearch. Ignored on subsequent runs.
    ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
    # Use single node discovery in order to disable production mode and avoid bootstrap checks.
    # see: https://www.elastic.co/guide/en/elasticsearch/reference/current/bootstrap-checks.html
    discovery.type: single-node
  networks:
    - elk
  restart: unless-stopped
```

3. Kibana Container

- Nothing to change here.

```yaml
kibana:
  build:
    context: kibana/
    args:
      ELASTIC_VERSION: ${ELASTIC_VERSION}
  volumes:
    - ./kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml:ro,Z
    - ./kibana/ca_1688430453313.crt:/usr/share/kibana/certs/ca_1688430453313.crt
  ports:
    - 5601:5601
  environment:
    KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
  networks:
    - elk
  depends_on:
    - elasticsearch
  restart: unless-stopped
```

4. Logstash Container

```yaml
logstash:
  build:
    context: logstash/
    args:
      ELASTIC_VERSION: ${ELASTIC_VERSION}
  volumes:
    - ./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro,Z
    - ./logstash/config:/usr/share/logstash/config:ro,Z
    - ./logstash/pipeline:/usr/share/logstash/pipeline:ro,Z
  ports:
    - 5044:5044
    - 50000:50000/tcp
    - 50000:50000/udp
    - 9600:9600
  environment:
    LS_JAVA_OPTS: -Xms256m -Xmx256m
    LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
  networks:
    - elk
  depends_on:
    - elasticsearch
  restart: unless-stopped
```

- This is the base configuration.
- Depending on how you use Logstash, additional settings are needed.

GitHub - elastic/logstash: Logstash - transport and process your logs, events, or other data (https://github.com/elastic/logstash)

- Files needed inside `config`:
  - `logstash.yml`
  - `jvm.options` (included in the GitHub repo above)
  - `log4j2.properties` (included in the GitHub repo above)
  - `certs`
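A missing entry here only shows up once the container fails to start, so a quick pre-flight check can save a cycle. A small Python sketch, assuming the `logstash/config` layout listed above (`certs` as a directory, the rest as files); `missing_config_entries` is a hypothetical name.

```python
from pathlib import Path
from typing import List

# Entries this post lists as required inside logstash/config.
REQUIRED_FILES = ("logstash.yml", "jvm.options", "log4j2.properties")
REQUIRED_DIRS = ("certs",)


def missing_config_entries(config_dir: Path) -> List[str]:
    """Return the required entries that are absent from config_dir."""
    missing = [name for name in REQUIRED_FILES if not (config_dir / name).is_file()]
    missing += [name for name in REQUIRED_DIRS if not (config_dir / name).is_dir()]
    return missing
```

For example, `missing_config_entries(Path("logstash/config"))` returns an empty list once everything is in place.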

Connecting Docker ELK to PostgreSQL

1. PostgreSQL JDBC Driver mount

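Mount the JDBC driver file into the volumes of the logstash container in `docker-compose.yml`.

- Do not mount it read-only!

```yaml
- ./logstash/config/postgresql-42.6.0.jar:/usr/share/logstash/logstash-core/lib/jars/postgresql-42.6.0.jar
```

- Appending `:ro` to the path makes the mount read-only, and `:z` marks the content as shared between containers. Both `z` and the uppercase `Z` used elsewhere in this compose file are SELinux relabeling options; `Z` makes the content private to a single container.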
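To see at a glance which mounts are read-only, the short volume syntax can be split into its parts. A Python sketch, assuming POSIX-style paths and at most one comma-separated option field; `parse_volume` and `BindMount` are illustrative names, not a Docker API.

```python
from dataclasses import dataclass


@dataclass
class BindMount:
    source: str
    target: str
    read_only: bool
    selinux: str  # "" = no relabel, "shared" for :z, "private" for :Z


def parse_volume(spec: str) -> BindMount:
    """Parse a short-syntax compose volume such as './a:/b:ro,Z'.

    Assumes POSIX-style paths (a Windows drive letter would add an extra ':').
    """
    parts = spec.split(":")
    if len(parts) not in (2, 3):
        raise ValueError(f"unexpected volume spec: {spec!r}")
    options = parts[2].split(",") if len(parts) == 3 else []
    if "Z" in options:
        selinux = "private"
    elif "z" in options:
        selinux = "shared"
    else:
        selinux = ""
    return BindMount(parts[0], parts[1], "ro" in options, selinux)
```

`parse_volume("./logstash/pipeline:/usr/share/logstash/pipeline:ro,Z")` reports a read-only, privately labeled mount, while the JDBC jar line reports `read_only=False`, which is what the driver mount needs.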

2. Editing the Logstash pipeline configuration file

This is what I had written when running ELK in the local environment:

```conf
input {
  jdbc {
    jdbc_driver_library => "./logstash/logstash-core/lib/jars/postgresql-42.6.0.jar"
    jdbc_driver_class => "org.postgresql.Driver"
    jdbc_connection_string => "jdbc:postgresql://localhost:5432/searching"
    jdbc_user => "{USERNAME}"
    jdbc_password => "{PASSWORD}"
    jdbc_fetch_size => 2
    schedule => "* * * * *"
    statement => "select * from contents WHERE id > :sql_last_value ORDER BY id ASC"
    last_run_metadata_path => "./logstash/data/plugins/inputs/jdbc/logstash_jdbc_last_run"
    use_column_value => true
    tracking_column_type => "numeric"
    tracking_column => "id"
    type => "data"
  }
}

output {
  elasticsearch {
    hosts => ["https://localhost:9200"]
    cacert => './logstash/config/certs/http_ca.crt'
    ssl => true
    user => "logstash_internal"
    password => "x-pack-test-password"
    index => "contents"
  }
}
```

The parts that need to change:

- Change the paths to paths inside the Docker container
- Change the PostgreSQL and Elasticsearch addresses
- Add an index template
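For the first item, the path Logstash sees inside the container follows from the volume mappings in `docker-compose.yml`: take the most specific mount whose host side contains the file and swap the prefix. A Python sketch of that lookup, using the Logstash mounts from this post as hypothetical input data; `container_path` is an illustrative name.

```python
from pathlib import PurePosixPath

# Logstash bind mounts from this post's docker-compose.yml ("host:container[:options]").
VOLUMES = [
    "./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro,Z",
    "./logstash/config:/usr/share/logstash/config:ro,Z",
    "./logstash/pipeline:/usr/share/logstash/pipeline:ro,Z",
    "./logstash/config/postgresql-42.6.0.jar:/usr/share/logstash/logstash-core/lib/jars/postgresql-42.6.0.jar",
    "./logstash/jdbc_last_run:/usr/share/logstash/jdbc_last_run",
    "./logstash/templates:/usr/share/logstash/templates",
]


def container_path(host_path: str, volumes=VOLUMES) -> str:
    """Translate a host path into the path Logstash sees inside the container."""
    host = PurePosixPath(host_path)  # "./" prefixes are normalized away
    best = None
    for spec in volumes:
        src, dst = spec.split(":")[:2]
        src_path = PurePosixPath(src)
        if host == src_path or src_path in host.parents:
            # Prefer the most specific (deepest) matching mount.
            if best is None or len(src_path.parts) > len(best[0].parts):
                best = (src_path, PurePosixPath(dst))
    if best is None:
        raise ValueError(f"{host_path} is not under any mounted volume")
    src_path, dst_path = best
    return str(dst_path / host.relative_to(src_path))
```

For example, `./logstash/config/certs/http_ca.crt` maps to `/usr/share/logstash/config/certs/http_ca.crt` (the `cacert` value), and the driver jar maps to the `jdbc_driver_library` path, because its own file mount is more specific than the `config` directory mount.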

### Switching to Docker container paths

```conf
input {
  jdbc {
    jdbc_driver_library => "/usr/share/logstash/logstash-core/lib/jars/postgresql-42.6.0.jar" # here
    jdbc_driver_class => "org.postgresql.Driver"
    jdbc_connection_string => "jdbc:postgresql://localhost:5432/searching"
    jdbc_user => "{USERNAME}"
    jdbc_password => "{PASSWORD}"
    jdbc_fetch_size => 2
    schedule => "* * * * *"
    statement => "select * from contents WHERE id > :sql_last_value ORDER BY id ASC"
    last_run_metadata_path => "/usr/share/logstash/jdbc_last_run/logstash_jdbc_last_run_keyword" # here
    use_column_value => true
    tracking_column_type => "numeric"
    tracking_column => "id"
    type => "data"
  }
}

output {
  elasticsearch {
    hosts => ["https://localhost:9200"]
    cacert => '/usr/share/logstash/config/certs/http_ca.crt' # here
    ssl => true
    user => "logstash_internal"
    password => "x-pack-test-password"
    index => "contents"
  }
}
```

- The logstash container creates a `logstash` folder under `/usr/share/` and builds its file system there. Use the same paths you mounted in `docker-compose.yml`. Also add a new mount for the `jdbc_last_run` folder.
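The `jdbc_last_run` file is where the jdbc input keeps `:sql_last_value` between scheduled runs, so Logstash has to be able to write to it. The incremental pattern is easy to reproduce in isolation. This Python sketch uses an in-memory SQLite table as a stand-in for the PostgreSQL `contents` table and stores the last value as a plain integer (the real plugin writes its own serialized format); `run_once` is a hypothetical name.

```python
import sqlite3
from pathlib import Path
from typing import List, Tuple


def run_once(conn: sqlite3.Connection, state_file: Path) -> List[Tuple[int, str]]:
    """One scheduled run of the incremental query.

    Mirrors: statement => "... WHERE id > :sql_last_value ORDER BY id ASC"
             use_column_value => true, tracking_column => "id"
    """
    # A numeric sql_last_value starts at 0 when no state has been saved yet.
    last_value = int(state_file.read_text()) if state_file.exists() else 0
    rows = conn.execute(
        "SELECT id, title FROM contents WHERE id > ? ORDER BY id ASC", (last_value,)
    ).fetchall()
    if rows:
        # Persist the highest id seen; this write fails if the folder is read-only.
        state_file.write_text(str(rows[-1][0]))
    return rows
```

Each call returns only rows added since the previous call, which is why a read-only location for this file breaks the pipeline.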

```yaml
# docker-compose.yml
volumes:
  - ./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro,Z
  - ./logstash/pipeline:/usr/share/logstash/pipeline:ro,Z
  - ./logstash/config:/usr/share/logstash/config:ro,Z
  # new
  - ./logstash/config/postgresql-42.6.0.jar:/usr/share/logstash/logstash-core/lib/jars/postgresql-42.6.0.jar
  - ./logstash/jdbc_last_run:/usr/share/logstash/jdbc_last_run
```

### Changing the PostgreSQL and Elasticsearch addresses

- Assuming Docker Compose does not run on the same machine as the database, change the PostgreSQL connection address to the database server's IP.

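```conf
# pipeline.conf
...
jdbc_connection_string => "jdbc:postgresql://{IP ADDRESS}:5432/{SERVER NAME}"
```

- Likewise, instead of `localhost`, Elasticsearch can be reached per container through the `elk` network defined in Docker, i.e. by its service name `elasticsearch` (I might be wrong about this).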

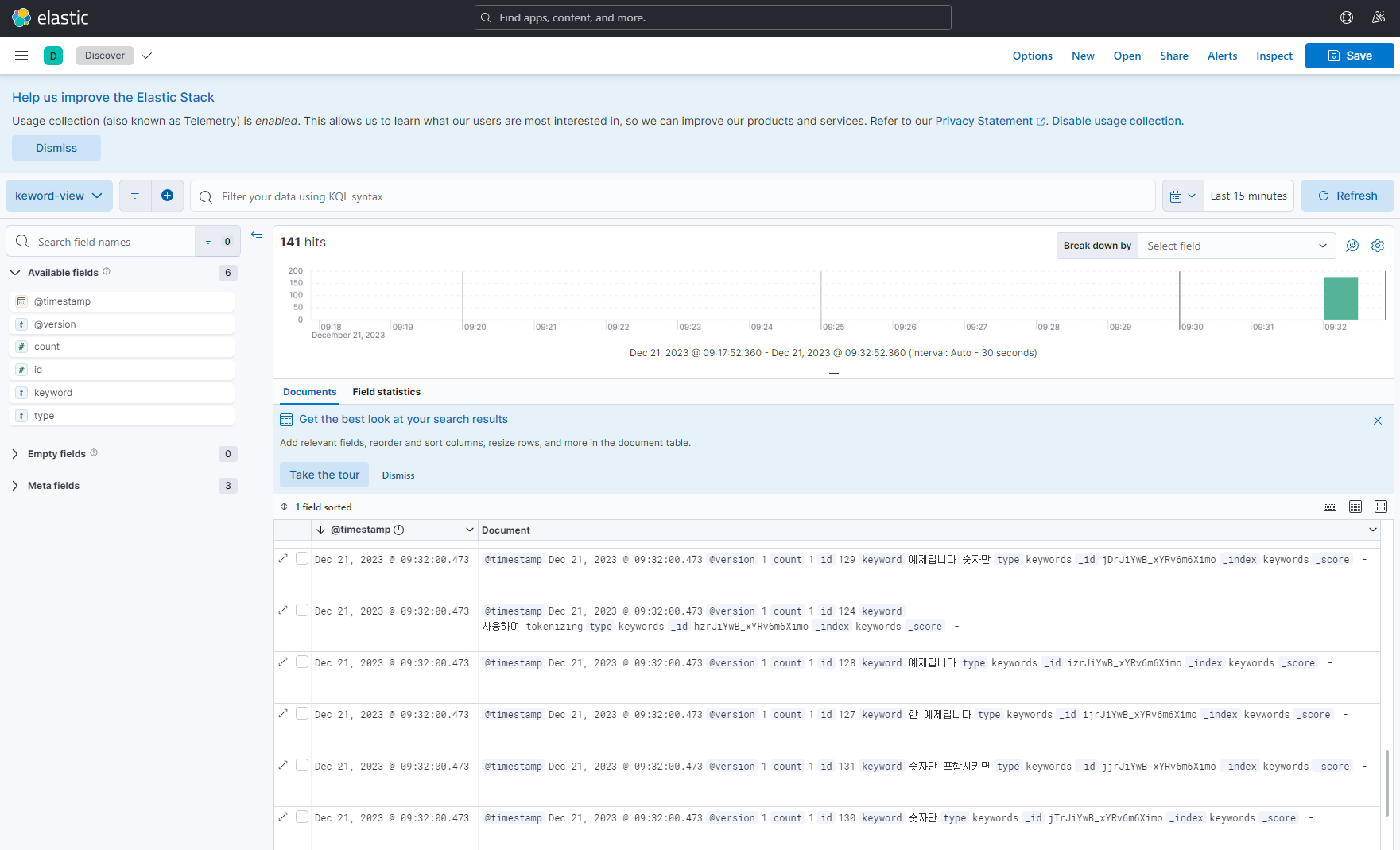

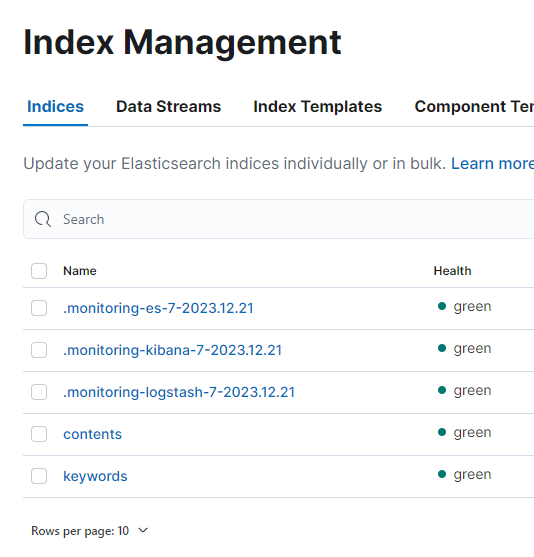

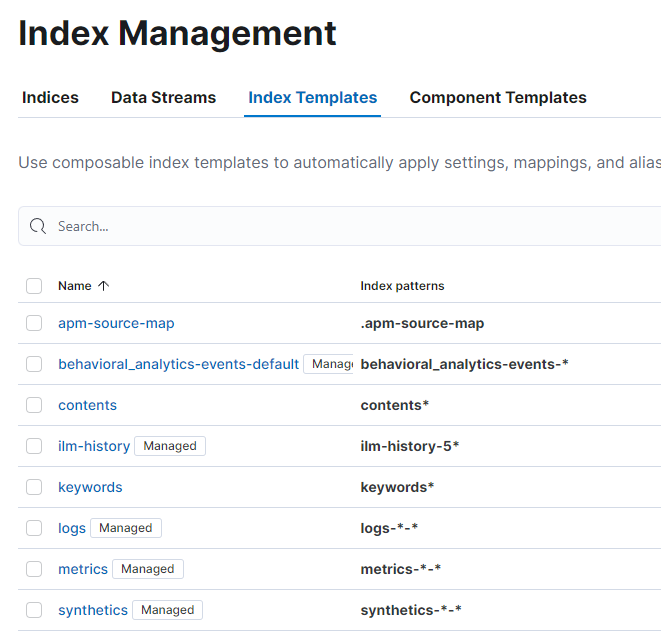
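Put together, only two values in `pipeline.conf` change: the JDBC URL points at the database server's IP, and `hosts` uses the compose service name. A small Python sketch that renders both lines; the IP `192.0.2.10` is a documentation placeholder, `searching` is the database from the local setup, `tls=False` assumes plain HTTP inside the `elk` network (the error log further down shows `http://elasticsearch:9200`), and the function names are hypothetical.

```python
import json
from typing import List


def jdbc_url(db_host: str, database: str, port: int = 5432) -> str:
    """PostgreSQL JDBC URL: jdbc:postgresql://host:port/database."""
    return f"jdbc:postgresql://{db_host}:{port}/{database}"


def es_hosts(service: str = "elasticsearch", port: int = 9200, tls: bool = False) -> List[str]:
    """Elasticsearch addressed by its compose service name on the shared network."""
    scheme = "https" if tls else "http"
    return [f"{scheme}://{service}:{port}"]


def render_addresses(db_host: str, database: str, tls: bool = False) -> str:
    """Render the two pipeline.conf lines that change when moving into Docker."""
    return "\n".join([
        f'jdbc_connection_string => "{jdbc_url(db_host, database)}"',
        f"hosts => {json.dumps(es_hosts(tls=tls))}",
    ])


print(render_addresses("192.0.2.10", "searching"))
```

This prints `jdbc_connection_string => "jdbc:postgresql://192.0.2.10:5432/searching"` followed by `hosts => ["http://elasticsearch:9200"]`.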

### Adding an index template

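- When Elasticsearch, Kibana, and Logstash ran separately, I could set up the index template in Kibana first and then start Logstash. Now everything starts at once, so it is better to write the template as JSON in advance and configure it that way.

- *If you use Elasticsearch 7.8.x or later, index templates use the composable index template format. The format is different, so be careful.*

- Below is an example index template.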

`templates/content_template.json`:

```json
{
  "index_patterns": ["contents*"],
  "template": {
    "settings": {
      "analysis": {
        "filter": {
          "custom_shingle_filter": {
            "max_shingle_size": "3",
            "min_shingle_size": "2",
            "type": "shingle"
          }
        },
        "char_filter": {
          "default_character_filter": {
            "type": "html_strip"
          }
        },
        "analyzer": {
          "whitespace_analyzer": {
            "filter": ["lowercase", "word_delimiter", "custom_shingle_filter"],
            "char_filter": ["html_strip"],
            "type": "custom",
            "tokenizer": "whitespace"
          },
          "nori_analyzer": {
            "filter": ["lowercase"],
            "char_filter": ["html_strip"],
            "type": "custom",
            "tokenizer": "korean_nori_tokenizer"
          }
        },
        "tokenizer": {
          "korean_nori_tokenizer": {
            "type": "nori_tokenizer",
            "decompound_mode": "mixed"
          },
          "custom-edge-ngram": {
            "token_chars": ["letter", "digit"],
            "min_gram": "1",
            "type": "edge_ngram",
            "max_gram": "10"
          }
        }
      },
      "number_of_shards": "48",
      "number_of_replicas": "0"
    },
    "mappings": {
      "properties": {
        "content": {
          "type": "text",
          "fields": {
            "search": {
              "type": "search_as_you_type",
              "doc_values": false,
              "max_shingle_size": 3,
              "analyzer": "whitespace_analyzer",
              "search_analyzer": "whitespace_analyzer",
              "search_quote_analyzer": "standard"
            }
          },
          "analyzer": "nori_analyzer",
          "search_analyzer": "nori_analyzer",
          "search_quote_analyzer": "standard"
        },
        "id": {
          "type": "long",
          "ignore_malformed": false,
          "coerce": true
        },
        "title": {
          "type": "text",
          "fields": {
            "search": {
              "type": "search_as_you_type",
              "doc_values": false,
              "max_shingle_size": 3,
              "analyzer": "whitespace_analyzer",
              "search_analyzer": "whitespace_analyzer",
              "search_quote_analyzer": "standard"
            }
          },
          "analyzer": "nori_analyzer",
          "search_analyzer": "nori_analyzer",
          "search_quote_analyzer": "standard"
        }
      }
    },
    "aliases": {}
  }
}
```

- Then mount the templates folder as a volume in docker compose:

```yaml
# ...
- ./logstash/templates:/usr/share/logstash/templates
```

- Add the index template to the `pipeline.conf` file:

```conf
output {
  elasticsearch {
    # ...
    index => "contents"
    manage_template => true
    template => "/usr/share/logstash/templates/content_template.json"
    template_name => "contents"
    template_overwrite => true
  }
}
```

3. Upload

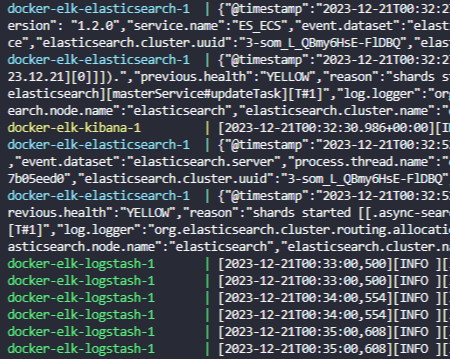

```bash
$ docker-compose up
```
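Before running `docker-compose up`, it can save a restart cycle to check that every analyzer and tokenizer the template mentions is actually defined, since a dangling name only surfaces as an error when Logstash tries to install the template. A Python sketch for a composable template like `content_template.json` above; the built-in name sets are deliberately partial, the function names are hypothetical, and it cannot tell whether a plugin such as analysis-nori (which provides the `nori_tokenizer` type) is installed.

```python
from typing import Dict, Iterator, List, Tuple

# Deliberately partial lists of built-in names; extend them if you use others.
BUILTIN_ANALYZERS = {"standard", "simple", "whitespace", "stop", "keyword", "pattern", "fingerprint"}
BUILTIN_TOKENIZERS = {"standard", "whitespace", "keyword", "letter", "lowercase", "classic", "pattern"}
ANALYZER_KEYS = ("analyzer", "search_analyzer", "search_quote_analyzer")


def used_analyzers(properties: Dict, prefix: str = "") -> Iterator[Tuple[str, str]]:
    """Yield (field path, analyzer name) for every analyzer a mapping refers to."""
    for name, field in properties.items():
        path = f"{prefix}{name}"
        for key in ANALYZER_KEYS:
            if key in field:
                yield path, field[key]
        # Multi-fields ("fields") and object fields ("properties") can nest.
        for nested in ("fields", "properties"):
            if nested in field:
                yield from used_analyzers(field[nested], prefix=f"{path}.")


def undefined_references(template: Dict) -> List[str]:
    """List analyzer/tokenizer names used in a composable template but never defined."""
    body = template.get("template", {})
    analysis = body.get("settings", {}).get("analysis", {})
    analyzers = analysis.get("analyzer", {})
    tokenizers = analysis.get("tokenizer", {})
    problems = []
    for path, analyzer in used_analyzers(body.get("mappings", {}).get("properties", {})):
        if analyzer not in analyzers and analyzer not in BUILTIN_ANALYZERS:
            problems.append(f"{path}: unknown analyzer {analyzer!r}")
    for name, spec in analyzers.items():
        tokenizer = spec.get("tokenizer")
        if tokenizer and tokenizer not in tokenizers and tokenizer not in BUILTIN_TOKENIZERS:
            problems.append(f"analyzer {name}: unknown tokenizer {tokenizer!r}")
    return problems
```

Loading the template above with `json.load` and passing it to `undefined_references` returns an empty list, since both custom analyzers and the `korean_nori_tokenizer` are defined in its `analysis` section.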

Common Issues

No Available connections

```
docker-elk-logstash-1 | [2023-12-19T07:33:09,096][ERROR][logstash.licensechecker.licensereader] Unable to retrieve license information from license server {:message=>"No Available connections"}
docker-elk-logstash-1 | [2023-12-19T07:33:09,103][ERROR][logstash.monitoring.internalpipelinesource] Failed to fetch X-Pack information from Elasticsearch. This is likely due to failure to reach a live Elasticsearch cluster.
```

=> A certificate problem. Copy `elasticsearch/config/certs` into `logstash/config/certs` and mount it as a volume to fix it.

+) Also check whether `ssl` is set to `true` in the Logstash output. In my case it only worked properly once it was set to `false`.
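The causes above, plus pointing `hosts` at `localhost` (which inside the Logstash container refers to the Logstash container itself), can be caught before starting the stack. A Python sketch of such a check over the values of the `elasticsearch` output block; `check_es_output` is a hypothetical helper, and the rule that the URL scheme should agree with `ssl` is a simplification of how the output plugin treats these settings.

```python
from typing import Iterable, List, Optional
from urllib.parse import urlparse


def check_es_output(hosts: Iterable[str], ssl: bool, cacert: Optional[str] = None,
                    mounted_dirs: Iterable[str] = ()) -> List[str]:
    """Return warnings for an elasticsearch output block (hypothetical helper)."""
    warnings = []
    mounted = [d.rstrip("/") + "/" for d in mounted_dirs]
    for host in hosts:
        url = urlparse(host)
        if url.hostname in ("localhost", "127.0.0.1"):
            # Inside the Logstash container, localhost is the Logstash container itself.
            warnings.append(f"{host}: localhost does not reach the elasticsearch container")
        if (url.scheme == "https") != ssl:
            warnings.append(f"{host}: scheme {url.scheme!r} disagrees with ssl => {str(ssl).lower()}")
    if ssl:
        if not cacert:
            warnings.append("ssl => true but no cacert is set")
        elif not any(cacert.startswith(d) for d in mounted):
            # The certificate must be visible inside the container via a volume.
            warnings.append(f"{cacert}: not inside any mounted directory")
    return warnings
```

Fed the values from the container version of the pipeline (`https://localhost:9200`, `ssl => true`, the `cacert` under `/usr/share/logstash/config`), it flags only the `localhost` host.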

jdbc driver - class not found

```
docker-elk-logstash-1 | [2023-12-19T08:15:49,228][ERROR][logstash.javapipeline ][postgresql] Pipeline error {
  :pipeline_id=>"postgresql",
  :exception=>
    #<LogStash::PluginLoadingError:
      #<Java::JavaLang::ClassNotFoundException: org.postgresql.Driver>. Are you sure you've included the correct jdbc driver in :jdbc_driver_library?>,
```

- In short, Logstash cannot load the JDBC driver class.
- Redo the volume mount in docker compose. Be careful: mounting the driver read-only causes this error.

```yaml
# docker-compose.yml
logstash:
  # ...
  volumes:
    # ...
    - ./logstash/config/postgresql-42.6.0.jar:/usr/share/logstash/logstash-core/lib/jars/postgresql-42.6.0.jar
```

jdbc last run permission denied

- Probably because the file sat under a folder that was mounted read-only.
- Moving it into a separate folder and mounting that folder fixed it.

```yaml
- ./logstash/jdbc_last_run:/usr/share/logstash/jdbc_last_run
```

Failed to install template

```
Failed to install template {:message=>"Got response code '400' contacting Elasticsearch at URL 'http://elasticsearch:9200/_index_template/content-template'",
```

- Starting with Elasticsearch 7.8, index templates are defined as composable index templates, so my template in the old format was not applied.
- Rewriting the index template in the new format fixed it.
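The difference between the two formats is mostly nesting: a legacy `_template` body keeps `settings`, `mappings` and `aliases` at the top level, while a composable `_index_template` body moves them under a `template` object. A Python sketch of that conversion; `to_composable` and `is_composable` are hypothetical helpers, and they refuse keys such as `order` whose translation to the composable format (`priority`, `composed_of`) needs a human decision.

```python
import copy

TEMPLATE_PARTS = ("settings", "mappings", "aliases")
ALLOWED_LEGACY_KEYS = {"index_patterns", "version", *TEMPLATE_PARTS}


def is_composable(body: dict) -> bool:
    """Composable bodies nest settings/mappings/aliases under a "template" object."""
    return isinstance(body.get("template"), dict) and not any(k in body for k in TEMPLATE_PARTS)


def to_composable(legacy: dict) -> dict:
    """Convert a legacy _template body into an _index_template body."""
    if is_composable(legacy):
        return copy.deepcopy(legacy)
    unexpected = set(legacy) - ALLOWED_LEGACY_KEYS
    if unexpected:
        raise ValueError(f"convert these keys by hand: {sorted(unexpected)}")
    if "index_patterns" not in legacy:
        raise ValueError("index_patterns is required")
    result = {"index_patterns": list(legacy["index_patterns"])}
    if "version" in legacy:
        result["version"] = legacy["version"]
    # Move the index-level parts one level down, under "template".
    result["template"] = {k: copy.deepcopy(legacy[k]) for k in TEMPLATE_PARTS if k in legacy}
    return result
```

Sending the converted body with `PUT _index_template/<name>` (for example from Kibana Dev Tools) is a quick way to confirm the format before wiring it into Logstash.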
